Artificial Intelligence is changing the world. And yet, still too often, the conversations are dominated by men, whether that means creating the algorithms that support the day-to-day uses of AI or discussing the politics of this fast-changing world.

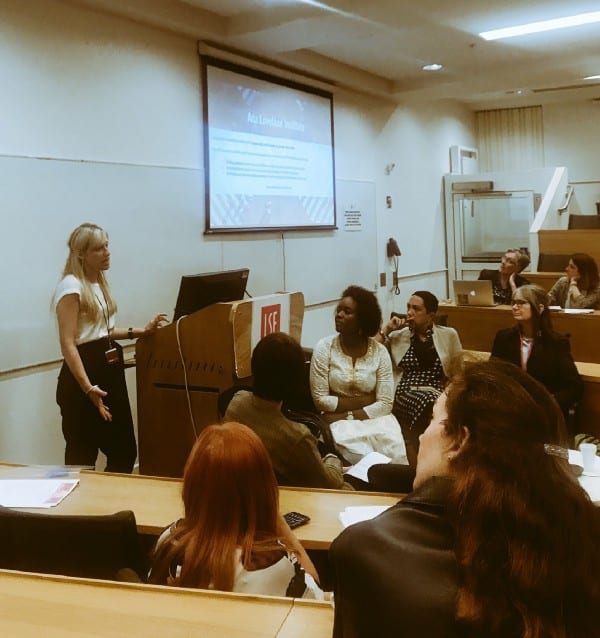

Our CEO (Europe) Alexsis Wintour recently attended the Women Leading in AI Conference 2018 at the London School of Economics. The conference brought together female thinkers, scientists, academics, businesswomen and politicians to discuss the future of AI – the ethics, bias and governance framework as well as opportunities and challenges for the future.

From left to right: Sue Daley, Head of Programme, Cloud, Data, Analytics & AI, TechUK; Tabitha Goldstaub, CognitionX; Zoe Webster, Head of Portfolio, InnovateUK; Eleonora Harwich, Head of Digital and Technological Innovation, Reform; Huma Lodhi, Principal Data Scientist, Direct Line Group.

Whether or not you know you are using Artificial Intelligence, the fact remains that this technology is already at work in every part of our lives. After a day discussing the impact that this has on women, not only personally but professionally, socially and economically, it’s clear we need to be clearer about it.

WHY ARE WOMEN SO WORRIED?

Few women are working in Artificial Intelligence. Not only does this make it difficult to represent female views equally, it raises other ethical questions. For example, take the situation where there may be a bias within the data used to train an AI. Is it possible that there may be a poor representation of female data because it doesn’t exist yet? We know any answer that an AI gives will be based on the information put into it. Who will be responsible for checking what the machines are learning?

Catherine Colebrook, Chief Economist and Associate Director for Economic Policy at IPPR, highlights research suggesting that women are less likely to look for different jobs: their attachment to the labour market is less intense. In reality, this means they are less likely to retrain and seek out new types of work. As women occupy a significant proportion of low-skilled jobs, the threat of them being left behind as automation takes over becomes increasingly real.

Something needs to be done, and women need to take action now. Holly Rafique, Digital Skills & Content Lead at #techmums, helps to address this issue: she works with mums, predominantly in disadvantaged areas, to increase their ability to use technology and so improve their own and their children’s lives.

FREAKING OUT OR FREAKING ON?

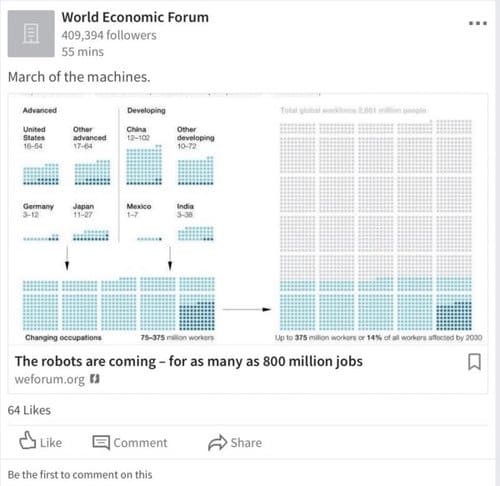

The press would have us believe that there is no other way than doomsday. The automation of everything is paired with fatal consequences for the job market. Moreover, this isn’t in the too distant future…

The World Economic Forum reported a study predicting that more than a fifth of the global labour force [800 million workers] might lose their jobs because of automation in the next decade.

Sue Daley, Head of Programme, Cloud, Data, Analytics & AI, TechUK, advocates a more moderate view, looking for the good uses of AI [and Sue, for you I have not included any apocalyptic “Machines are taking over the world” photos]. A small example is Denmark using Corti, an AI that helps call handlers detect whether someone is having a heart attack.

If you get a chance, check out the big-picture thinking that Jade Leung at the Future of Humanity Institute does on the long-term governance of Artificial Intelligence. There are many, many more examples. Eleonora Harwich, Head of Digital and Technological Innovation, Reform, looks at innovative uses in the Public Sector, such as predictive policing and the Serious Fraud Office’s use of AI to detect large-scale bribery at Rolls-Royce.

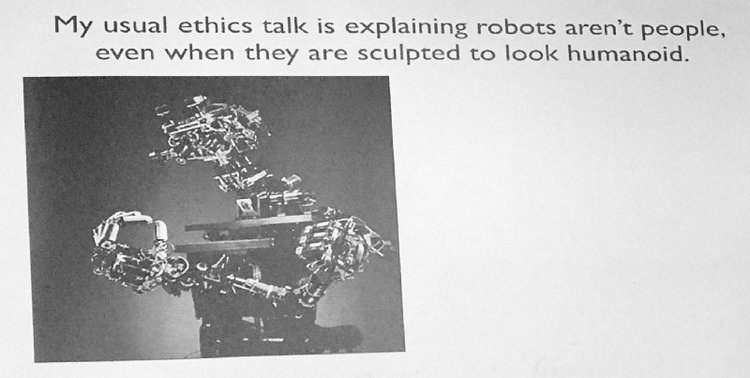

Perhaps part of the problem is our human desire to procreate in whatever form we can. We have re-created ourselves in machines and will continue to do so. However, too much anthropomorphism isn’t a great thing. Prof. Joanna Bryson, Associate Professor, Department of Computing at the University of Bath, points out:

“Only humans can be dissuaded by human justice — AI is a machine and doesn’t care if it is stuck in solitary confinement — don’t think you can generalise from human and assume an AI can be a legal person”

— Prof. Joanna Bryson, Associate Professor, Department of Computing, University of Bath

Prof. Joanna Bryson asking, “Why do we make robots look like humans?”

WHAT PROBLEMS IS AI SOLVING AND CREATING?

Kate Bell, Head of the Economic and Social Affairs department at the TUC, suggests that the UK is facing three pressures all at once:

1. A problem with productivity — it costs more to make less nowadays;

2. More insecurity in jobs; and

3. A high number of people in employment

Technology does not solve all of them… so where does it help? Certainly not in productivity, e.g. Slack replaced email but now we spend more time on Slack. To understand where it’s impacting the economy and society it’s worth discussing questions such as:

– Will Artificial Intelligence solve these problems, add to them, or add another dimension to them entirely?

– Will it usher in an era of no jobs, more jobs, or just different types of jobs? It’s possible that technology causes a higher number of people to be in employment, but isn’t this the opposite of what the research suggests?

You’ll find very quickly a philosophical debate surfaces with questions such as:

– What happens if disadvantaged groups don’t have a say in the technology created?

– Shouldn’t algorithms be checked for bias?

– Should it be mandatory that AI should reflect the diversity of the users it serves?

Enron had a fabulous code of ethics, which was completely ignored: the company overstated its earnings by several hundred million dollars.

Dr Paula Boddington, Senior Researcher on “Towards a code of ethics for artificial intelligence research” at the Department of Computer Science, Oxford University, takes the view that ethics in AI is so interesting because it asks us simple, fundamentally important questions which are very difficult to answer.

Such as: How do we live our lives?

DOES GOOD USE OF AI START WITH OUR CHILDREN?

Growing up in our world today means experiencing frictionless AI: it is pervasive and omnipresent. We are beginning to use it seamlessly, and eventually we will use it unknowingly.

Upstream work with children is, therefore, essential. Seema Malhotra, MP, states that:

“Familiarity, connectivity, ethical use, and curiousness will all be hallmarks of educational success if done well”.

— Seema Malhotra, MP

This begins with significant investment from government and interest from many sectors. For example, Seyi Akiwowo, from Glitch!UK (ending online abuse and harassment) has a schools programme teaching children about how to be good digital citizens, promoting fair behaviour online.

Seema Malhotra, MP, asks: “What are we thinking about young people in school today? They will be leaders in our workplace of the future”.

— Seema Malhotra, MP

Seema Malhotra MP, President of the Fabian Women’s Network; Kate Bell, Head of Economic and Social Affairs department, TUC; Jade Leung, DPhil, Governance of Artificial Intelligence, Future of Humanity

What does this mean for our future job hunters? Will they be hired:

– Because of their ability to use an AI? Will they and their AI be interviewed together for the job?

– Because they can use AI? Will the ones better versed in the technology be hired?

– Jobs of the future, such as Personality Trainers for Chatbots, Augmented Reality Journey Builders and Personal Memory Curators, are only just beginning to exist today. All of them require AI application knowledge. Does this mean children who can afford more and better technology get hired more?

WHAT MAKES FOR A GOOD AI IMPLEMENTATION?

There’s no time here for an in-depth review of what will make AI implementations fit for humankind and right for your organisation. But there is one absolute truth: it all starts with your data. The EU, and the countries following its lead, can now enforce standards for better personal data use. This has created a step-change, re-educating individuals as to what their data means both personally and as part of wider groups.

Summary questions for AI implementation preparation:

In summary, you will need to think about these essential elements as part of your approach:

– Create and adopt a set of principles for ethical AI use which address a wide picture of the society in which you operate and your organisation;

– Translate AI principles into your organisation’s methodology for governing work, including within the risk and compliance areas — make it a living part of your organisation’s approach to Corporate Governance;

– See Human and Non-Human Talent Management as a board-level issue, not an IT Manager’s issue. Your talent pool is probably already a mix of both, so make sure your workforce planning and talent strategies include this analysis and detail the relevant actions;

– Look at employment issues and the overall legal employment framework in which you are operating;

– Develop AI within your Digital Strategy and ensure your organisation is ready, attitudinally as well as practically. Look at your Data and Information Management Strategy. Is your data still in paper form? If electronic, is it clean, compliant with Data Protection Legislation and ready? Make sure you know what problem you are trying to solve. New skills will be needed, so include these in your Training Needs Analysis Audit;

– Educate the Executive Board and Senior Management Team now, so that they begin to role model the right behaviours and attitudes in line with your principles;

– Understand how the adoption of AI is happening in your organisation. [Do you know how much automation is happening, and is your approach desirable? Where are you sourcing your AIs? Do you know the algorithms they use? How do you test source data, account for bias and, above all, make sure that outputs are understood and interpreted correctly? Do you know your personal data processes, including retention and deletion? Do you know how much revenue and profit is created from the use of these tools in your organisation?];

– Assess your Corporate Social Responsibility programmes in light of the impact of this technology on the communities within which you operate.

YOU’RE NOT ON YOUR OWN — HELP IS ARRIVING

The Ada Lovelace Institute is being established in the UK to “ensure the power of data, algorithms and artificial intelligence is harnessed for social good. It will promote more informed public dialogue about the impact of these technologies on different groups in society, guide ethical practice in their development and deployment, and undertake research to lay the foundations for a data-driven society with wellbeing at its core”.

Whilst it’s early days and the full remit and functions of the Institute are still developing, Imogen Parker, Head of Justice, Citizens and Digital Society Programmes at The Nuffield Foundation, asks us to consider four important questions:

Imogen Parker’s Questions from the Ada Lovelace Institute

Key ethical and governance challenges

1. How can we build a public evidence base on how data and AI affect people, groups in society and society as a whole?

2. How can we create more powerful public voices to shape practice?

3. How can we embed ethical practice for those developing and deploying AI?

4. How can we articulate a positive vision for the type of society we want, and lay the political, economic and social foundations to create it?

If you haven’t seen CogX, the Festival of AI, which is on in London soon, check out the lineup. It’s going to be fabulous, and many of these questions are scheduled to be debated there.

IS THIS JUST A WOMEN’S ISSUE?

Women’s issues are men’s too — in fact, many people of all genders identify with the condition that women find themselves in. This is just one vehicle towards a much needed, wider debate. Prof. Joanna Bryson reminds everyone that disparity of any group often mirrors the condition of women.

“It’s important we gather all people together to debate the issue of ethics in Artificial Intelligence”.

— Prof. Joanna Bryson, Associate Professor, Department of Computing, University of Bath

Ivana Bartoletti, Chair of Fabian Women’s Network

Personally, I highly recommend joining in the discussion. The more minds, disciplines, views and input the better.

The event was enthusiastically and brilliantly organised by Dr Allison Gardner, Teaching Fellow in Bioinformatics, Keele University and Councillor, Newcastle-under-Lyme Council. It was exuberantly and inspiringly chaired by Ivana Bartoletti, Chair of the Fabian Women’s Network of the Fabian Society.

ABOUT THE AUTHOR OF THIS BLOG:

“I happen to be fortunate to work with a team who are strong advocates of using technology fairly and ethically.

However, I know I’m one of the lucky ones, so this story is dedicated to progressing this topic, for the good of humankind”.

— Alexsis Wintour, CEO HumAIn Resources, and CEO Europe, Marbral Advisory